Last night I was glad to participate in the local authors’ event of the Chiswick Book Festival, and I’m excited to have been included on their prestigious list of local authors from the place I call home. The list reaches back to Alexander Pope (born 1688), and includes authors like WB Yeats, EM Forster, Anthony Burgess, Iris Murdoch, Eric Morecambe, JG Ballard, Harold Pinter, Lynn Redgrave, and Roger Daltrey (yes, of The Who, and he did write a book, too). And now I am some tiny part of perhaps the most literary neighbourhood in London. Who’d a thunkit?

Come see me talk about Rage at Hay Hill in London on Wednesday Sept. 11 /

There are still tickets available for the talk I’m giving at 12 Hay Hill on Sept. 11th. I previously listed this event as closed to the public, but I was wrong! Hope to see some of you there!

Tickets (with a glass of wine when you walk in) are 15 pounds, but for 30, you also get a copy of the book, which I’ll gladly sign!

USAToday OpEd: My social media feeds look different from yours and it's driving political polarization /

Angela Saini on Rage /

Very pleased at these comments from a writer I very much admire, Angela Saini. If you’ve yet to read her fantastic work, check out Inferior: How Science Got Women Wrong-and the New Research That's Rewriting the Story, which I feel is now a classic, and her latest work, Superior: The Return of Race Science, which covers some ground in common with Rage, in a different and important manner.

HM Chief Science Advisor on National Security Praises My Book, Rage Inside the Machine /

Over the moon that my old boss from UCL Computer Science, Anthony Finkelstein CBE FREng, who is now UK Chief Science Advisor on National Security, has finished Rage, and said “Rob Smith has written an important book that looks at AI in a careful and balanced way, combining wit and scholarship…I learnt a lot.” Full quote here.

Review of Rage at BoingBoing (Again)! /

In honour of the USA publication of Rage, Cory Doctorow has posted a review of the book at BoingBoing again. Amongst other things, he sez:

“This is a vital addition to the debate on algorithmic decision-making, machine learning, and late-stage platform capitalism, and it's got important things to say about what makes us human, what our computers can do to enhance our lives, and how to have a critical discourse about algorithms that does not subordinate human ethics to statistical convenience.”

Thanks so much Cory!

Video re-post for US/AU publication day! /

Publication Day (2): Rage Inside the Machine: The Prejudice of Algorithms, and How to Stop the Internet Making Bigots of Us All /

Excited to say that the book is now shipping in the USA and Australia. It’s been out for a couple of months in the UK, and the reviews and feedback at promotional events have been great. I know quite a few people have pre-ordered in the USA and Oz, so I really look forward to hearing people’s feedback from those countries soon. I really hope you all enjoy it, and that it sparks new, much-needed conversations about technology, and how it fits into our lives.

How can AI be used to drive diversity?: Canvas8 Interview /

Market research organisation Canvas8 has published a new piece called HOW CAN AI BE USED TO DRIVE DIVERSITY? that draws on a recent breakfast talk I gave to some of their members here in London. The piece features a few quotes from me and a slew of data about the current perceptions of AI. Some are frightening, like “67% of Britons say AIs should be able to report people if they engage in illegal activities.”

But I believe the article ends with some good recommendations based on what Canvas8 got out of my ideas. In particular, they note how recommendation engines generate pretty unsatisfactory results for consumers (and generate only 16 per cent of sales), and how diversity as a goal could improve those sales. This may seem like a small thing, but applications with diversity as their goal and an economic imperative could drive the work of developing tech that better diversifies algorithmic impacts on people more generally. And I’m all for that!

Are Algorithms Prejudiced? (6 minutes from my Talk At Google) /

For those of you who’d like to look at something shorter before watching my hour-long contribution to Talks at Google (or better yet, before reading Rage), here’s a six-minute excerpt that gives you a feel for the material.

My Talk@Google on Rage is now available online! /

I was lucky enough to be invited to give an hour-long talk on Rage at Google recently, and I’m glad to say it’s now online. Those at the talk seemed to really enjoy it, and the organiser called it “fantastic” and “an ML talk unlike any other ML talk.”

I’ll be excerpting it a bit later for those who want to get a shorter feel for what’s in the book via video.

The Curse of Meritocracy /

RSA has posted an excellent “minimate” video from the wonderful Michael Sandel, on how the (false) idea that the world is meritocratic - that people “get what they deserve” - leads to the social dysfunction and populist politics of today, curses that we need to overcome. Highly recommended viewing.

There’s a subtle connection here to an idea in my book, Rage Inside the Machine. Around 56 seconds into the minimate, you’ll see a sketch of The Fates. In Rage, I talk about how the idea of these fateful figures, who actually worked above the Gods, and would respond to no plea or prayer, was abandoned as a concept with the widespread adoption of a single omniscient God, and the invention of probability theory in the Renaissance.

That development leads directly to the idea held by many AI technologists that if we just calculate the right set of statistics from big data, we can make the highest merit decisions about the future. But this overlooks the fact that probability theory does not model the complexity of the real world, and never can.

So the abandonment of the concept of The Fates leads not only to the tyranny of meritocracy that Michael Sandel talks about, but also a “decision meritocracy” based on faith in big data algorithmics. This new faith overlooks the real-world uncertainty that non-probabilistic, human decision-making has been adapted specifically to address.

So it’s not just that we have a false idea of social merit that’s connected to our divisive world, it’s the idea that we can make decisions of inherently high merit based on algorithms and big data. We need to re-centre humanity in our decision-making and be more humane in accepting that much of our success and failure lies in the hands of Fate.

And I also recommend Sandel’s fantastic book What Money Can’t Buy: The Moral Limits of Markets.

Hurrah: Turing to be on the new 50 pound note /

Alan Turing, the man who created computer science, while simultaneously saving the entire world from fascism through his critical role in winning WWII, only to later be chemically castrated and driven to suicide as a punishment for the crime of homosexuality, is to finally be honoured by appearing on British currency, in particular the new 50 pound note.

My book, Rage, retells some stories about Turing, including a re-appraisal of his eponymous “test” (aka The Imitation Game), a casting of new light on how Universal Computation relates to human thought, and Turing’s little known role in evolutionary algorithms.

But all that’s by-the-by. Turing was a great hero, and bestowing this public honour on him is a small start at redressing the historic injustice he faced. For my money, he should be on the far-more-used 20, but nonetheless, this is a triumph. Hurrah.

Do Algorithms Have Business Ethics? (New Article Online) /

I’m happy to say I have an article in this month’s AMBITION, the magazine of AMBA, The Association of MBAs. The piece is entitled “Do Algorithms Have Their Own Business Ethics” (and you can read it online by clicking through on the title). Those of you who know me, or have read Rage, won’t be surprised to know that I think they do, but you may find this slightly different take on the subject interesting.

The Big Issue is AI /

This week’s Big Issue has the fabulous David Lynch on the cover, but inside you’ll find a full page article from me! The article is about face recognition and deep fake video technology, and their relationship to the dubious history of quantitative human identification in criminal justice, which stretches back to the 19th century. But I don’t want to give too much away about the article, because you really should buy The Big Issue.

And not just because I have an article in it. The Big Issue is a publication that is trying to improve the lot of the homeless, by giving them the chance to sell the magazine, as well as supporting a charitable foundation that aims to end the poverty giving rise to homelessness.

So buy this issue, and read my article, but don’t stop there. Buy it every week. It’s not only a bargain (it’s a surprisingly good magazine), it’s an opportunity to change someone’s life, and possibly change the world.

I'm this week's guest on Tim Haigh's Books Podcast /

If you’d like to hear me talk a bit about Rage, tune in and have a listen. Thanks for having me on, Tim!

Cory Doctorow BoingBoinged Rage! /

I can’t even say how excited I am that Cory Doctorow has posted a rather glowing review of the book on BoingBoing, which is arguably the most popular English-language blog in the world. He calls the book:

a vital addition to the debate on algorithmic decision-making, machine learning, and late-stage platform capitalism, and it's got important things to say about what makes us human, what our computers can do to enhance our lives, and how to have a critical discourse about algorithms that does not subordinate human ethics to statistical convenience.

You can read the full review here on BoingBoing, and I’ve copied it over to the Rage website here.

Thank you Mr. Doctorow, and I’m so glad you enjoyed it!

Publication Day! Please Feed the Beast and Review at Amazon! /

Well, today’s the day, and the book is now shipping (internationally) from amazon.co.uk. Those of you who pre-ordered in the UK should have copies next Tuesday, and others should have it soon after!

The book features a lot of examination of how algorithms create feedback loops that influence human society. Ironically, this is true for the algorithms that promote books at Amazon, so, if you are interested in the message in Rage getting propagated, one way to help is to go to the Rage page at Amazon and give the book a few stars. It’d be a real help to me, but more importantly, I hope it’ll help people get a more informed perspective on algorithms, so we can start changing their effects on everyone’s lives.

About Time! An Interview with Helen Bagnall /

About Time Magazine have put up an interview with Helen Bagnall, co-founder of Salon London and The Also Festival.

Salon is an ongoing series of events in London that focus on permutations of the theme “science, art and psychology,” and I’ve been fortunate enough to speak at a couple of their gigs, and attend a few more.

Also, in my opinion, is the best ideas festival in the UK. It’s sometimes called “TED talks in a field,” but frankly, I think that’s an undersell. If you like hearing from interesting people, and fancy a quirky, bucolic setting, there is nothing finer than Also.

And at the core of these events is Helen, who (even in this interview) is far too modest about her talents. She’s a born curator, and that’s a skill I really appreciate. One thing I talk about in Rage is that creativity comes from juxtaposition of ideas, and Helen’s events prove she’s a real master at that.

Her breadth of knowledge and insight (plus the fact that she must read 100 books a year) is one of the reasons I’m so glad she’s spoken kindly about Rage. You can read her comments here.

And I’m very lucky to be doing a talk and a couple of panels at Also this year, 5th-7th of July (see the events page and the Also site for details). I really hope I’ll see some of you there.

Algorithms and the evolution of graphics (and society) /

It’s very gratifying to have someone read Rage and say complimentary things about it. For instance, Chris Kutarna, co-author of Age of Discovery: Navigating the Storms of Our Second Renaissance (an excellent book, which I’ve previously praised), said that my writing…

“…accomplishes what few people could attempt: to humanize the discourse on artificial intelligence.”

(You can read Chris’s complete review of the book here).

But what’s really gratifying is that in his recent newsletter (to which I strongly recommend you subscribe), Chris shared insights he gleaned from the book, and I’ve got to say, he really got it. In particular, he very cleverly adapted a figure that I created. I, in turn, adapted that figure from another source, and I think it’s interesting to see the evolution, particularly since the figure is itself about evolution.

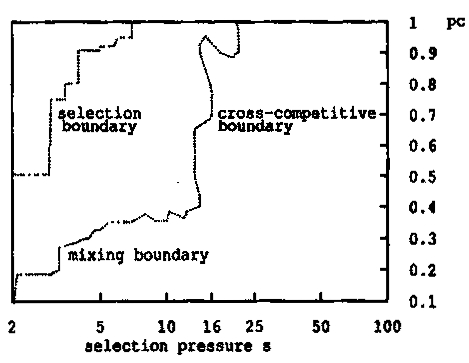

Dave Goldberg was my PhD supervisor, and in his excellent book The Design of Innovation: Lessons from and for Competent Genetic Algorithms, Dave figured out how selection (the process of deciding in such algorithms) has to be balanced with mixing (the process of creating new things by stirring them together) to get an effective genetic algorithm.

Here’s the original figure, which is actually made from running thousands of genetic algorithms on mathematical optimisation problems:

(By the way, those three “boundaries” are derived from maths Dave did, and the actual algorithms he ran confirmed that his models were correct.)
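For readers who would like to see the two forces in code, here is a minimal Python sketch of a genetic algorithm with one dial for selection and one for mixing. It is a toy, not Dave's models or experiments: the "OneMax" problem (count the 1s in a bit string), the function names, and every parameter value are assumptions picked purely for illustration. Tournament size stands in for selection pressure, and the crossover probability stands in for the amount of mixing.

```python
import random

def one_max(bits):
    """Fitness for the toy problem: the number of 1s in the bit string."""
    return sum(bits)

def tournament(pop, size, rng):
    """Selection: return the fittest of `size` randomly drawn individuals."""
    return max(rng.sample(pop, size), key=one_max)

def uniform_crossover(a, b, rng):
    """Mixing: build a child by taking each bit from one parent or the other."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]

def run_ga(tournament_size, crossover_prob,
           length=40, pop_size=60, generations=30, seed=0):
    """Run a mutation-free GA and return the best fitness in the final population.

    tournament_size controls selection pressure (hypothetical dial #1).
    crossover_prob controls how often parents are mixed (hypothetical dial #2).
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        new_pop = []
        for _ in range(pop_size):
            parent_a = tournament(pop, tournament_size, rng)
            parent_b = tournament(pop, tournament_size, rng)
            if rng.random() < crossover_prob:
                child = uniform_crossover(parent_a, parent_b, rng)
            else:
                child = list(parent_a)  # no mixing: copy the selected parent
            new_pop.append(child)
        pop = new_pop
    return max(one_max(individual) for individual in pop)

if __name__ == "__main__":
    # Sweep both dials and print the best fitness reached for each pairing.
    for size in (1, 2, 8):
        for prob in (0.0, 0.5, 1.0):
            print(f"selection={size} mixing={prob}: best={run_ga(size, prob)}")
```

Running the sweep at the bottom gives a rough, informal feel for how the two dials interact on this one toy problem. It is nowhere near the thousands of runs behind the original figure, and it says nothing about where the boundaries in that figure actually lie.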

Pretty obscure stuff, but as the main title of Dave’s book suggested, it has broader implications. In particular, what I observed in Rage is that the strict, quantitative “selection” of algorithms in our lives needs to be balanced with human beings mixing ideas, for us to have a society that is designed for effective social innovation. So I simplified Dave’s figure to create this:

Chris created the following figure:

And this is where he really got what I was talking about in Rage. This figure is about the perception that algorithms (AI in the figure) deliver something intrinsically more “optimal” than human choice (H in the figure). This is a false perception, predicated on the idea that in human problems, an “optimal choice” exists (which very often isn’t the case, for reasons I discuss in the book). That means that when algorithms decide for us, we are further out along the left-to-right “selection” axis in the figures created by Goldberg and me.

Chris further adapted those figures, first showing a simplified form of my adaptation of Dave’s chart:

His new labels are insightful: the lower part is “Fragile” because false “optimal” solutions are insufficiently adaptive in one way, and the upper part is “chaotic” because it is insufficiently adaptive in another way. Then Chris introduces his final figure:

And that’s the point, all summed up in a figure. To get effective evolution of society, we need to have an algorithmic infrastructure that balances pressure towards so-called “optimal” solutions, and the mixing of human ideas to create new, innovative ideas.

Thanks for mixing up innovative illustrations of the ideas in Rage, Chris!