It’s very gratifying to have someone read Rage and say complimentary things about it. For instance, Chris Kutarna, co-author of Age of Discovery: Navigating the Storms of Our Second Renaissance (an excellent book, which I’ve previously praised), said that my writing…

“…accomplishes what few people could attempt: to humanize the discourse on artificial intelligence.”

(You can read Chris’s complete review of the book here.)

But what’s really gratifying is that in his recent newsletter (to which I strongly recommend you subscribe), Chris shared insights he gleaned from the book, and I’ve got to say, he really got it. In particular, he very cleverly adapted a figure that I created. I, in turn, adapted that figure from another source, and I think it’s interesting to see the evolution, particularly since the figure is itself about evolution.

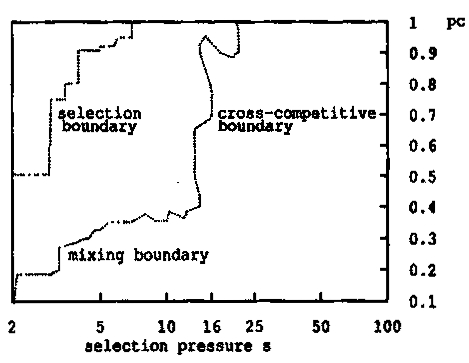

Dave Goldberg was my PhD supervisor, and in his excellent book The Design of Innovation: Lessons from and for Competent Genetic Algorithms, Dave figured out how selection (the process of deciding in such algorithms) has to be balanced with mixing (the process of creating new things by stirring them together) to get an effective genetic algorithm.

Here’s the original figure, which is actually made from running thousands of genetic algorithms on mathematical optimisation problems:

(By the way, those three “boundaries” are derived from maths Dave did, and the actual algorithms he ran confirmed that his models were correct.)
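For readers who like to tinker, here is a toy sketch of what “selection” and “mixing” mean in a genetic algorithm. To be clear, this is not Dave’s experimental setup or his models: the problem (counting 1 bits, often called OneMax), the function name `run_ga`, and every parameter value are my own assumptions, chosen only for illustration. Selection pressure is set by `tournament_size`, and mixing by `crossover_prob`. There is deliberately no mutation, so anything new has to come from mixing.

```python
import random

def run_ga(tournament_size, crossover_prob, n_bits=40, pop_size=60,
           generations=40, seed=0):
    """Toy GA on OneMax (maximise the number of 1 bits).

    Hypothetical illustration only: names and defaults are assumptions,
    not taken from Goldberg's book.
    """
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]

    def select():
        # Selection: keep the fittest of `tournament_size` random individuals.
        # A larger tournament means stronger selection pressure.
        return max(rng.sample(pop, tournament_size), key=sum)

    for _ in range(generations):
        children = []
        while len(children) < pop_size:
            a, b = select(), select()
            if rng.random() < crossover_prob:
                # Mixing: uniform crossover takes each bit from either parent.
                child = [rng.choice(pair) for pair in zip(a, b)]
            else:
                # No mixing: the child is a plain copy of the first parent.
                child = list(a)
            children.append(child)
        pop = children

    # Best fitness (number of 1 bits) in the final population.
    return max(sum(ind) for ind in pop)

if __name__ == "__main__":
    # Sweep a small grid of selection pressure versus mixing.
    for s in (1, 2, 8):
        for pc in (0.0, 0.5, 1.0):
            print(f"tournament_size={s} crossover_prob={pc}: best={run_ga(s, pc)}")
```

Sweeping the two knobs like this is a crude, small-scale cousin of the experiments behind the figure: each combination of selection pressure and mixing is one point on the chart, and the question is which combinations let the algorithm keep improving.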

Pretty obscure stuff, but as the main title of Dave’s book suggested, it has broader implications. In particular, what I observed in Rage is that the strict, quantitative “selection” of algorithms in our lives needs to be balanced with human beings mixing ideas, for us to have a society that is designed for effective social innovation. So I simplified Dave’s figure to create this:

Chris created the following figure:

And this is where he really got what I was talking about in Rage. This figure is about the perception that algorithms (AI in the figure) deliver something intrinsically more “optimal” than human choice (H in the figure). This is a false perception, predicated on the idea that in human problems, an “optimal choice” exists (which very often isn’t the case, for reasons I discuss in the book). That means that when algorithms decide for us, we are further out along the left-to-right “selection” axis in the figures created by Goldberg and me.

Chris further adapted those figures, first showing a simplified form of my adaptation of Dave’s chart:

His new labels are insightful: the lower part is “Fragile” because false “optimal” solutions are insufficiently adaptive in one way, and the upper part is “chaotic” because it is insufficiently adaptive in another way. Then Chris introduces his final figure:

And that’s the point, all summed up in a figure. To get effective evolution of society, we need an algorithmic infrastructure that balances pressure towards so-called “optimal” solutions with the mixing of human ideas to create new, innovative ones.

Thanks for mixing up innovative illustrations of the ideas in Rage, Chris!